Today’s guest post is from Onur Solmaz, VP of Engineering and Research at TextCortex, who shares how his team leveraged Cloudfleet to revolutionize their development workflow.

Here at TextCortex, we’re building an enterprise-grade AI platform that enables organizations to securely deploy AI agents that automate high-value workflows. By connecting to company-wide knowledge, our platform enhances everything from content creation and data analysis to team collaboration. We are obsessed with building a seamless and powerful product, and that obsession extends to our own internal development processes.

As our team and ambitions grew, we faced two significant scaling challenges. First, building an AI-first platform means running extensive test suites and complex build processes that require significant computational resources, so our CI/CD costs climbed rapidly while we relied on GitHub’s default runners. Second, creating isolated preview deployments for each new branch was a complex, manual process. Our infrastructure ran on a serverless platform that lacked the flexibility we needed to create a replica environment for every feature branch. We knew we needed a more powerful and cost-effective foundation like Kubernetes, but as a team, we didn’t have deep Kubernetes administration experience. We needed a way to get the power of Kubernetes without the steep learning curve. That’s when we turned to Cloudfleet.

Our first win: slashing CI/CD costs with elastic runners

Our journey with Cloudfleet began with tackling our CI/CD costs. We were impressed that getting a Kubernetes cluster up and running took less than five minutes. From there, we switched from GitHub’s default runners to self-hosted GitHub runners, managed by the official open-source actions-runner-controller project. These runners are provisioned by Cloudfleet on Hetzner, a very cost-efficient European cloud provider. The entire migration was complete in less than two days, and this single change cut our CI costs by 80%. The elasticity of the Cloudfleet platform was key; it automatically scales our runner infrastructure up during peak working hours and scales it down to zero during nights and weekends.
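The sketch below shows what a comparable runner setup can look like. It uses Helm values for the `gha-runner-scale-set` chart from actions-runner-controller. It assumes ARC's runner scale set mode, which the post doesn't specify. The organization URL, the secret name, the runner limits, and the instance type are all hypothetical. `minRunners: 0` lets the runner pods scale to zero. Scaling the underlying nodes down is left to Cloudfleet's autoscaling.

```yaml
# Helm values for the gha-runner-scale-set chart (sketch, hypothetical names/limits)
githubConfigUrl: https://github.com/example-org   # hypothetical organization
githubConfigSecret: arc-github-app                # hypothetical pre-created Secret with GitHub credentials
runnerScaleSetName: arc-standard                  # the name workflows target in runs-on
minRunners: 0    # no idle runner pods at night or on weekends
maxRunners: 20   # hypothetical upper bound during peak hours
template:
  spec:
    # Pin runner pods to a node type; Cloudfleet provisions matching nodes on demand
    nodeSelector:
      cfke.io/provider: hetzner
      node.kubernetes.io/instance-type: cx22   # hypothetical small shared-CPU type
    containers:
      - name: runner
        image: ghcr.io/actions/actions-runner:latest
        command: ["/home/runner/run.sh"]
```

Install the controller chart first. Then install these values with `helm install` from `oci://ghcr.io/actions/actions-runner-controller-charts/gha-runner-scale-set`.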

We’ve taken this a step further by defining different hardware profiles for our runners. Compute-intensive jobs, like building our application, are automatically routed to powerful machines with more resources, while simpler, trivial jobs run on cheaper hardware. This is achieved using different GitHub Actions runner labels that correspond to different node types in our cluster. This level of granular control ensures we get the performance we need without overpaying for it.
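In this mode, routing happens in the workflow file. The sketch below assumes two runner scale sets with the hypothetical names `arc-small` and `arc-large`. Each scale set is pinned to a different instance type through its pod template. In ARC's runner scale set mode, the value of `runs-on` is the scale set name. The job names and `make` targets are illustrative only.

```yaml
# .github/workflows/ci.yml (sketch; scale set names and steps are hypothetical)
name: ci
on:
  pull_request:
  push:
    branches: [main]
jobs:
  lint:
    # Trivial job: goes to the cheaper hardware profile
    runs-on: arc-small
    steps:
      - uses: actions/checkout@v4
      - run: make lint
  build:
    # Compute-intensive job: goes to the larger machines
    runs-on: arc-large
    steps:
      - uses: actions/checkout@v4
      - run: make build
```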

From CI/CD savings to full preview environments

The success and cost savings of our CI/CD migration motivated us to do more with Cloudfleet, so we tackled our next big challenge: creating ephemeral preview environments for our development workflow.

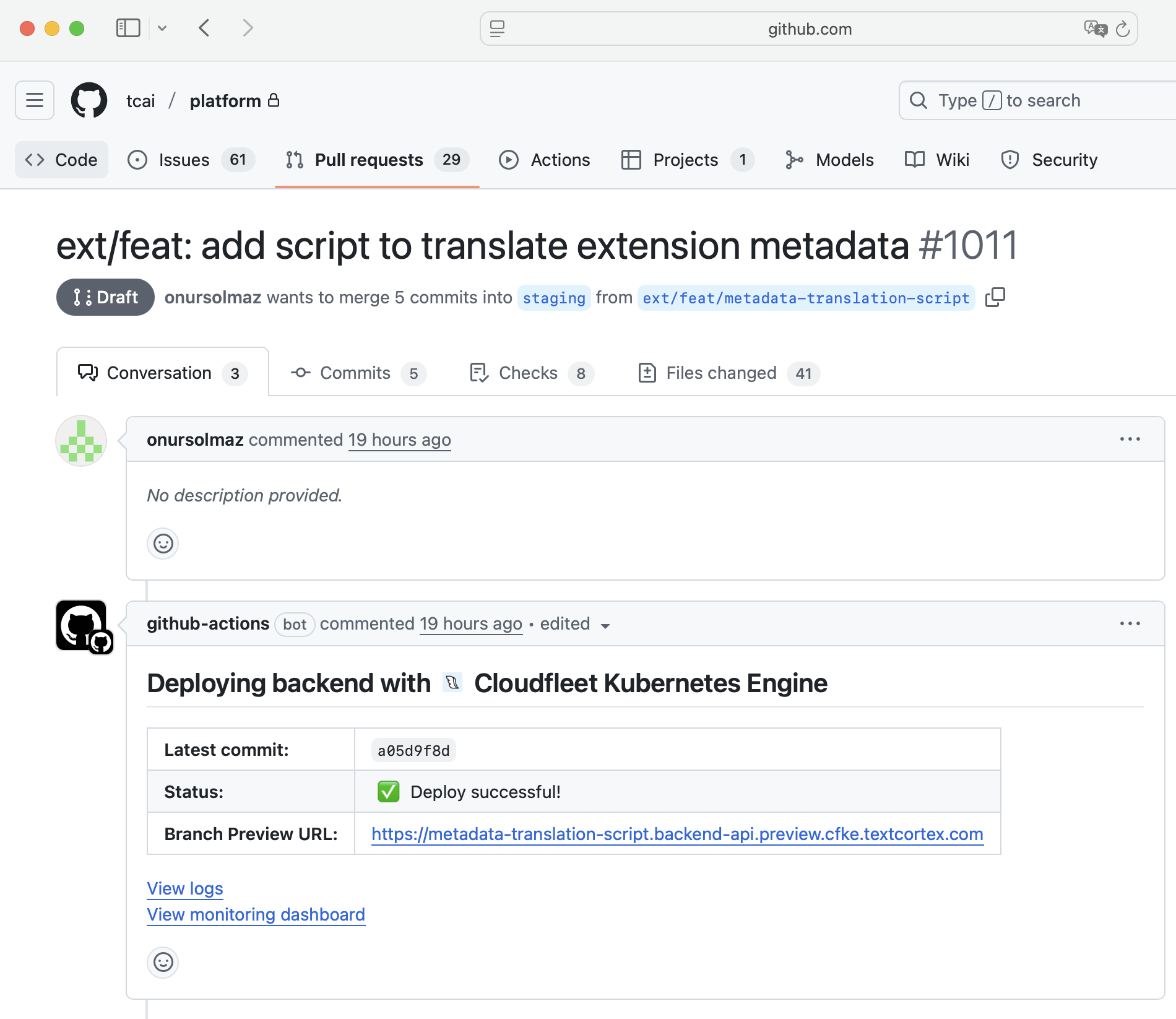

The solution we landed on was to manage these environments entirely through a standardized deployment process. Today, our workflow is completely transformed. Every time a developer pushes a new branch, a GitHub Action triggers our deployment process on the Cloudfleet Kubernetes Engine (CFKE) cluster. This process creates a unique and isolated environment for that branch.
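The sketch below shows a push-triggered workflow that deploys each branch into its own namespace. It assumes a Helm chart at the hypothetical path `./charts/preview`. It also uses a simple naming rule that lowercases the branch name and replaces `/` and `_` with `-`. Cluster access is left as a comment because it follows the Cloudfleet tutorial, and this sketch doesn't reproduce it.

```yaml
# .github/workflows/preview-deploy.yml (sketch; chart path and naming rule are hypothetical)
name: preview-deploy
on:
  push:
    branches-ignore: [main]
jobs:
  deploy:
    runs-on: ubuntu-latest   # hosted image ships with helm and kubectl
    steps:
      - uses: actions/checkout@v4
      # Assumption: credentials for the CFKE cluster are configured here,
      # following the Cloudfleet tutorial on access from GitHub Actions (not shown).
      - name: Deploy branch to its own namespace
        env:
          BRANCH: ${{ github.ref_name }}   # passed via env to avoid script injection
        run: |
          # "feature/new-editor" -> "feature-new-editor"
          # Simplified: names may need more cleanup to be valid DNS labels,
          # and Helm release names are limited to 53 characters.
          RELEASE=$(echo "$BRANCH" | tr '[:upper:]' '[:lower:]' | tr '/_' '--')
          helm upgrade --install "$RELEASE" ./charts/preview \
            --namespace "$RELEASE" --create-namespace \
            --wait
```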

This means every feature, bug fix, or experiment gets its own isolated, live environment with a predictable URL. The lifecycle is automated too. When a branch is deleted, a GitHub Action deletes the namespace that the branch was deployed into, which destroys the entire environment and all of its resources. We developed some custom actions for this and followed the Cloudfleet tutorial to access our Cloudfleet cluster from GitHub Actions.
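The sketch below shows a matching cleanup workflow. It assumes the same hypothetical naming rule as the deploy step, since both must derive the same namespace from a branch name. GitHub only runs `delete`-triggered workflows from the default branch, so the file has to live there. For this event, the deleted branch name is in `github.event.ref`.

```yaml
# .github/workflows/preview-cleanup.yml (sketch; naming rule must match the deploy workflow)
name: preview-cleanup
on: delete
jobs:
  cleanup:
    # The delete event also fires for tags; only tear down branch environments
    if: github.event.ref_type == 'branch'
    runs-on: ubuntu-latest
    steps:
      # Assumption: cluster credentials are configured here (not shown).
      - name: Delete the branch namespace
        env:
          BRANCH: ${{ github.event.ref }}   # name of the deleted branch
        run: |
          NAMESPACE=$(echo "$BRANCH" | tr '[:upper:]' '[:lower:]' | tr '/_' '--')
          # Deleting the namespace removes every resource in it,
          # including the Helm release records stored there.
          kubectl delete namespace "$NAMESPACE" --ignore-not-found --wait=false
```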

A deep dive into our environment automation

The real power of this approach is how our automation interacts with the features provided by the Cloudfleet platform.

Intelligent node provisioning with labels

One of the best features of CFKE is how it handles node provisioning. We don’t have to think about sizing our cluster. Instead, we define our scheduling requirements directly in our deployment configuration.

Snippet of our Kubernetes Deployment manifest, dynamically generated in the pipeline:

```yaml
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: cfke.io/provider
                operator: In
                values:
                  - hetzner
              - key: topology.kubernetes.io/region
                operator: In
                values:
                  - nbg1
              - key: kubernetes.io/arch
                operator: In
                values:
                  - amd64
              - key: node.kubernetes.io/instance-type
                operator: In
                values:
                  - cx42
                  - cpx41
```

Our deployment process takes simple configuration values and renders the nodeAffinity rule you see above. We tell Kubernetes that our pods for preview environments must run on the Hetzner provider, in the Nuremberg region, on amd64 architecture. To keep costs down, we target cx42 or cpx41 instance types, which use shared CPUs.
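The post doesn't show how the values are turned into this rule. One way to do it with a Helm helper template is sketched below. The `nodePlacement` values keys and the `preview.nodeAffinity` helper name are hypothetical. The rendered output matches the manifest snippet above.

```yaml
# --- values.yaml (hypothetical keys) ---
nodePlacement:
  provider: hetzner
  region: nbg1
  arch: amd64
  instanceTypes:
    - cx42
    - cpx41

# --- templates/_helpers.tpl ---
{{- define "preview.nodeAffinity" -}}
nodeAffinity:
  requiredDuringSchedulingIgnoredDuringExecution:
    nodeSelectorTerms:
      - matchExpressions:
          - key: cfke.io/provider
            operator: In
            values:
              - {{ .Values.nodePlacement.provider | quote }}
          - key: topology.kubernetes.io/region
            operator: In
            values:
              - {{ .Values.nodePlacement.region | quote }}
          - key: kubernetes.io/arch
            operator: In
            values:
              - {{ .Values.nodePlacement.arch | quote }}
          - key: node.kubernetes.io/instance-type
            operator: In
            values:
              {{- range .Values.nodePlacement.instanceTypes }}
              - {{ . | quote }}
              {{- end }}
{{- end }}

# --- templates/deployment.yaml (excerpt) ---
spec:
  template:
    spec:
      affinity:
        {{- include "preview.nodeAffinity" . | nindent 8 }}
```

Keeping the rule in one helper means every workload in the chart gets the same placement. Changing the target hardware then becomes a values change rather than a template edit.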

This level of flexibility is one of the key reasons we are looking forward to moving our production workloads to Cloudfleet as well. For production, we will be able to simply change the labels to request dedicated CPUs by specifying cfke.io/instance-family: ccx on the affinity rule.
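The sketch below shows one way the production term could look. It assumes the `cfke.io/instance-family` requirement replaces the instance-type requirement rather than adding to it, so that any size in the dedicated-CPU `ccx` family qualifies.

```yaml
# Production variant (sketch): instance-family term instead of instance-type term
nodeSelectorTerms:
  - matchExpressions:
      - key: cfke.io/provider
        operator: In
        values: [hetzner]
      - key: topology.kubernetes.io/region
        operator: In
        values: [nbg1]
      - key: kubernetes.io/arch
        operator: In
        values: [amd64]
      - key: cfke.io/instance-family
        operator: In
        values: [ccx]   # dedicated-CPU family
```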

In both scenarios, if a node matching all these labels isn’t available in the cluster, Cloudfleet’s node auto-provisioning kicks in and creates one for us. We define our infrastructure needs as code, and the platform provides the exact resources on demand.

Dynamic routing with Istio VirtualService

To get dynamic routing for our preview environments, we needed a service mesh. We decided to install Istio ourselves on our Cloudfleet cluster, and the process was straightforward. Following the Cloudfleet documentation, particularly the tutorial at https://cloudfleet.ai/tutorials/cloud/install-istio/, made for a quick setup. Our self-managed Istio installation has been running reliably on the platform ever since.
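With Istio's default sidecar mode, pods only join the mesh if their namespace has sidecar injection enabled. This matters if the preview workloads are meant to be part of the mesh. The sketch below shows a namespace with that label. The namespace name is hypothetical, and other installation modes use different labels. Ambient mode, for example, uses `istio.io/dataplane-mode=ambient`.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: feature-new-editor   # hypothetical preview namespace
  labels:
    istio-injection: enabled # inject sidecars into pods created in this namespace
```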

Our deployment automation then gives each branch its own unique URL. The GitHub Actions pipeline generates the host from the branch name.

```yaml
# From our virtualservice.yaml
apiVersion: networking.istio.io/v1
kind: VirtualService
metadata:
  name: backend-api
spec:
  hosts:
    - {{ .Release.Name }}.preview.example.com # Domain redacted
  http:
    - route:
        - destination:
            host: backend-api.{{ .Release.Namespace }}.svc.cluster.local
            port:
              number: 800
```

When our pipeline deploys a branch named feature/new-editor, the host is rendered as feature-new-editor.preview.example.com (domain redacted). Our Istio installation picks up this configuration and handles the rest, automatically routing traffic to the correct backend service for that branch.
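The VirtualService above lists a host but no `gateways` field. When that field is omitted, Istio applies the rule only to traffic inside the mesh. To let browser traffic reach a preview URL, the route is typically bound to an ingress Gateway. The sketch below shows one shared wildcard Gateway. Its name and TLS secret are hypothetical, and it assumes Istio's default `istio: ingressgateway` selector. To use it, the VirtualService would add `gateways: [istio-system/preview-gateway]`.

```yaml
apiVersion: networking.istio.io/v1
kind: Gateway
metadata:
  name: preview-gateway         # hypothetical
  namespace: istio-system
spec:
  selector:
    istio: ingressgateway       # default label on Istio's ingress gateway pods
  servers:
    - port:
        number: 443
        name: https
        protocol: HTTPS
      tls:
        mode: SIMPLE
        credentialName: preview-wildcard-tls   # hypothetical wildcard certificate Secret
      hosts:
        - "*.preview.example.com"  # one Gateway covers every branch host
```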

Secure, keyless access to external cloud APIs

While our compute workloads run on Cloudfleet, we still consume many services, like storage buckets, from one of the large cloud providers. Initially, we planned to use static credentials to access these services from our Kubernetes pods. However, the Cloudfleet team pointed us to a much better, more secure solution: accessing resources without any static keys at all.

Following the Cloudfleet documentation at https://cloudfleet.ai/docs/workload-management/accessing-cloud-apis/, we configured a trust relationship between our Kubernetes cluster and our cloud provider’s identity system. This OIDC-based approach allows our Kubernetes service accounts to securely exchange a short-lived token for temporary cloud credentials. We were impressed with how seamlessly our workloads could access external services this way. It completely saved us from the compliance nightmare of rotating and securing long-lived cloud credentials.
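The provider-specific setup is covered in the Cloudfleet documentation linked above. The underlying Kubernetes building block is generic, though: a projected service account token with a dedicated audience, which a cloud SDK can exchange for temporary credentials. The sketch below shows this. The names, image, audience, and mount path are hypothetical. How the SDK is told where to find the token depends on the provider and is not shown.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: backend-api              # hypothetical
---
apiVersion: v1
kind: Pod
metadata:
  name: backend-api
spec:
  serviceAccountName: backend-api
  containers:
    - name: app
      image: example/backend-api:latest   # hypothetical image
      volumeMounts:
        - name: cloud-token
          mountPath: /var/run/secrets/cloud
          readOnly: true
  volumes:
    - name: cloud-token
      projected:
        sources:
          - serviceAccountToken:
              audience: example-cloud-audience   # hypothetical; must match the trust config
              expirationSeconds: 3600            # short-lived; the kubelet rotates it
              path: token
```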

The result: faster, safer, and happier developers

By combining our standardized configurations with the powerful automation features of the Cloudfleet platform, we’ve built a development workflow that is fast, secure, and incredibly efficient. Our team can now build and validate their work in parallel, leading to faster iterations and higher-quality code. The elasticity of the platform means we only pay for what we use. We’ve truly given our developers superpowers, and we couldn’t have done it without a platform that makes the complexities of Kubernetes feel effortless.